The Aim of Digitising: More than just Reading Texts

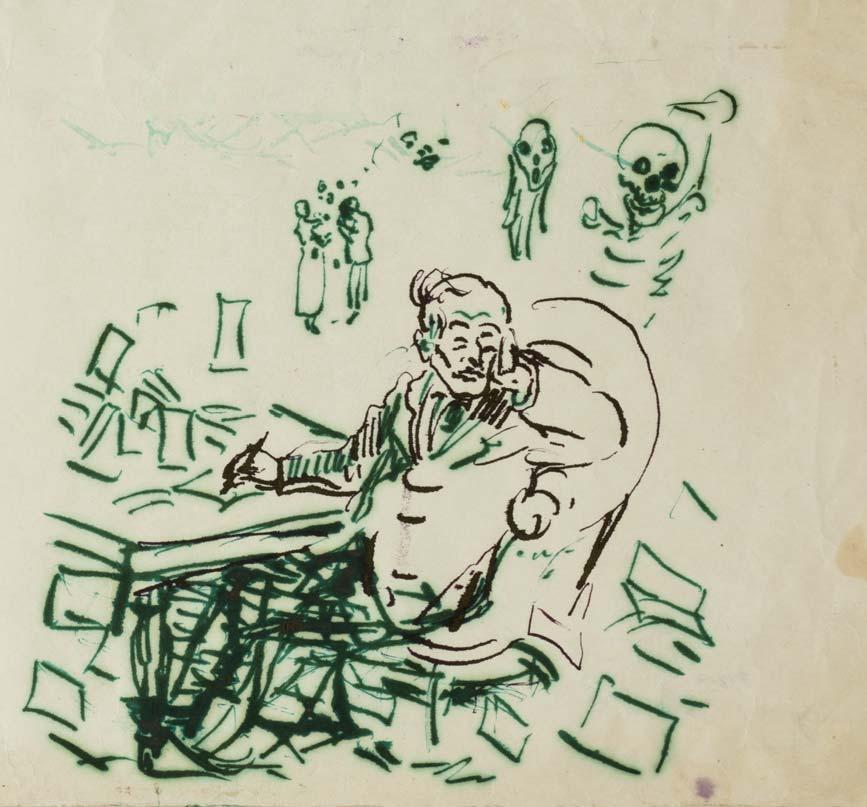

ILL. 26. EDVARD MUNCH AT HIS DESK 1925–30

The digital archive of Edvard Munch’s collected writings eMunch.no contains transcriptions of texts together with facsimiles of all the manuscript pages. Ordinarily, when one publishes a modern writer’s works, one has a quantity of printed books published in the writer’s lifetime which the new edition is organised around. Archival material, such as manuscripts, is then linked to these printed books as originals and the basis for commentary. Letters have an in-between position; most of them were not published in the writer’s lifetime, but have been given a finished form, which is then sent off to the recipient. In Munch’s case, only a few of his letters and manuscripts were previously published. What we have then is primarily a collection of documents that are not connected with published texts.

Munch’s archive was built up by the artist himself, in that he kept all of his papers and records. He used it in his daily work, both in resolving practical issues such as tax questions and in his artistic endeavours. Through Munch’s will, the archive was subsequently donated to the City of Oslo and became part of the collection housed in the Munch Museum.1 In this way Munch himself contributed to redefining the archive from his own working tool into a Munch archive for posterity.

How is the publication of Munch’s archive organised in the digital archive eMunch.no? It is based on two different traditions: the tradition of scholarly editing and the museum tradition.2 The first emphasises the texts as literary works, while the second emphasises the texts as historical resources, where one views text segments such as names and dates as indicators pointing to historical persons, institutions, places, points in time and events. In addition, it is important to present the graphic aspects of the manuscripts, which often contain visual elements. This is done by giving digital facsimiles a central place and by inserting direct links between a transcribed text and segments of a facsimile page.

In this article I will outline some of the major features in the development of the tradition of scholarly editing and the museum tradition seen in light of the development of various areas of usage for digital texts. In conclusion, I will come back to the work on the Munch project and the results being achieved.

The Tradition of Scholarly Editing: Father Busa and the Computer as a Textual Tool

Towards the end of the 1940s the Jesuit priest Robert Busa started working on a concordance of the works of Thomas Aquinas.3 He was the first to make use of computers to create printed concordances, although handwritten concordances have been compiled since the middle of the 13th century.4 With the development of computer technology it became clear that one could develop a digital text which represented the work in question as running text, and generate simple concordances from it. This idea can be seen in KWIC (keyword in context) concordances, which were described for the first time in 1959.5 In a KWIC concordance the result of a search in a collection of texts is shown as a list of hits, where each hit includes not only the word that was searched for but also a few words of context before and after it.
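The KWIC display described above can be sketched in a few lines of Python. This is only a minimal illustration, not a reconstruction of any historical system: the function name `kwic`, the `width` parameter and the sample sentence are all assumptions made for the example.

```python
import re

def kwic(text, keyword, width=3):
    """Return one line per hit, with up to `width` words of context on each side."""
    words = re.findall(r"\w+", text)
    lines = []
    for i, word in enumerate(words):
        if word.lower() == keyword.lower():
            left = " ".join(words[max(0, i - width):i])
            right = " ".join(words[i + 1:i + 1 + width])
            # The keyword is bracketed so that it stands out in each hit.
            lines.append(f"{left} [{word}] {right}".strip())
    return lines

# Invented sample text, used only to show the output format.
sample = "The sun set over the fjord. I felt the sun burn through nature."
for line in kwic(sample, "sun"):
    print(line)
```

Each printed line corresponds to one hit, so a reader can scan the contexts of a word without opening the full text.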

The aspect of interactivity is just as important as the display format in such systems. Interactive systems for text analysis had a breakthrough during the 1980s and 90s in the form of a number of PC-based tools.6 These allowed a rapid cycle of questions, answers and new questions. One no longer had to wait for computer systems which, owing to cost and processing time, could not be run whenever one wished. An exciting development in recent years is web-based systems for text analysis, where one can either use analysis tools on texts that are available on the internet or upload one’s own. In this way one avoids having to install the systems on one’s own computer. A good example of this is the Canadian system Tapor.7

A digital text could be used for more than forming the basis for concordances. Of particular importance was the fact that one could produce printed books based on the same data material that one used for the concordances. This is still a core issue of working with digital texts: A digital base document is the point of departure for various forms of use, from printed and digital text editions via KWIC concordance-based search systems to experimental tools for examining texts, and even educational games based on text.

Text Encoding as a General Method

In order to make this possible, general tools for the various processes have to be developed. General software tools cannot always solve a task completely, but they can often do at least 90% of the job, so that by using them one can concentrate on the few tasks that are particular to one’s own work. One precondition for general tools is that many institutions, projects and individuals work in more or less the same way. This is one of the reasons why the Text Encoding Initiative (TEI) was initiated in 1987.8 TEI is today a membership organisation that provides aids for the entire process from source material to various types of editions. Of central importance is a set of guidelines for text encoding, which also includes a formal encoding scheme that can be used to validate the structure of the digital text. TEI also offers a number of tools that create websites and typeset output based on TEI documents. Projects with simple needs can use these tools directly; others must adapt them to their needs.

TEI encoding is a type of XML encoding. This implies that general tools for all XML users can also be used. The codes that are inserted in the text can represent everything from the structure of the document, such as chapters and paragraphs, to the encoding of its contents, which allows one to mark such things as proper names, dates and events described in the text. There are a number of modules that can be used as required. One example is the module for changes made in a manuscript, such as deletions, corrections and insertions, for which there is a rich vocabulary.

What all of this has in common is that things which exist implicitly in the text, and which are understandable to a competent reader, are made explicit. In an explicit form they can then be processed by a computer. This means that it is possible to make numerous versions of the text material. One example is lists of names; another is an edition where crossed-out text is marked with a line through the word; a third is an edition where the text is normalised by silently incorporating all of the changes. As this shows, encoding of this type implies various levels of interpretation of the text. What is encoded is one or several specific readings of a text.
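How one encoded text can yield several versions may be illustrated with a small sketch. It assumes a simplified, namespace-free fragment using the TEI element names `persName`, `del` and `add`; the sentence itself is invented, and the `[-...-]` marker for deleted text is merely a stand-in for a struck-through rendering. It is not the eMunch.no encoding or its publication software.

```python
import xml.etree.ElementTree as ET

# Invented fragment; real TEI documents carry a namespace and a richer structure.
fragment = (
    "<p>I met <persName>Anna</persName> in "
    "<del>Berlin</del><add>Paris</add> that winter.</p>"
)
root = ET.fromstring(fragment)

def render(elem, show_deletions):
    """Flatten an element to plain text, keeping or dropping <del> content."""
    parts = [elem.text or ""]
    for child in elem:
        if child.tag == "del":
            if show_deletions:
                parts.append("[-" + render(child, show_deletions) + "-]")
        else:
            parts.append(render(child, show_deletions))
        # Text that follows the child element belongs to the parent's reading.
        parts.append(child.tail or "")
    return "".join(parts)

names = [e.text for e in root.iter("persName")]   # a list of names
diplomatic = render(root, show_deletions=True)    # deletions shown
normalised = render(root, show_deletions=False)   # changes incorporated

print(names)
print(diplomatic)
print(normalised)
```

The three outputs come from the same source, which is the point of the encoding: the choice between them is made at publication time, not at transcription time.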

Text Analysis

The analysis of texts has always been closely associated with reading, and it will continue to be so. But there are a number of aids for text analysis that open up new possibilities. We have looked at one group of such aids above, namely concordances. In this section we will take a look at methods that are based on various statistical tools.

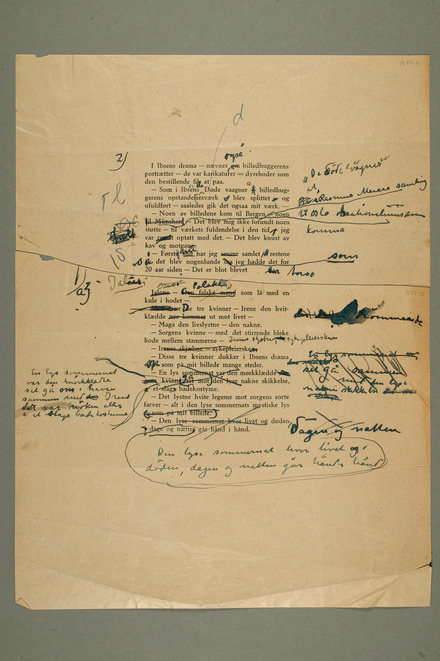

ILL. 27. PROOFS FOR THE BOOKLET THE ORIGINS OF THE FRIEZE OF LIFE 1928–29, MM N 77-4

Although attempts at analysing texts by counting the length and frequency of words were made at the close of the 19th century,9 the use of statistics in the analysis of texts became possible on a greater scale only when computers were taken into use for processing texts. When one uses statistical methods for analysing data, it is extremely important that one is knowledgeable about the methods one uses as well as the significance of the answers one finds.10 It is helpful to divide the use of such methods in two. First, there are the statistical methods where the results are used directly. One example of this is author attribution. A typical task is a text that one assumes was written by one of two writers, without knowing which. In that case one analyses certain phenomena in a set of texts written by each of the writers, for instance the use of prepositions, to discover a typical pattern, a “fingerprint”, for each of them. One then does the same thing with the unknown text to find out which of the authors’ patterns it resembles the most. This then becomes an indication of who the author might be. How strong this indication is has to be evaluated on the basis of statistical criteria. For this type of work it is important that the statistical methods are fully understood. It is very simple to use today’s advanced statistical tools to produce neat visualisations that can be misinterpreted in many different ways. One has to know what one is looking at.
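The “fingerprint” idea can be sketched as follows. This is a toy illustration under stated assumptions: the word list `FUNCTION_WORDS`, the sum of absolute differences used as a distance, and the sample sentences are all chosen for the example, and texts this short could never support a real attribution. As the paragraph above stresses, the strength of any such indication would still have to be evaluated with proper statistical criteria.

```python
import re
from collections import Counter

# Hypothetical set of prepositions used as the stylistic fingerprint.
FUNCTION_WORDS = ["in", "on", "of", "to", "with", "by"]

def profile(text):
    """Relative frequency of each function word in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = Counter(words)
    total = len(words) or 1
    return [counts[w] / total for w in FUNCTION_WORDS]

def distance(p, q):
    """Sum of absolute differences between two profiles."""
    return sum(abs(a - b) for a, b in zip(p, q))

def closest_author(unknown, known):
    """Name of the known author whose profile is nearest to the unknown text."""
    u = profile(unknown)
    return min(known, key=lambda name: distance(u, profile(known[name])))

# Invented sample texts standing in for two authors' collected writings.
known = {
    "A": "the house of the man of the town stood by the sea of ice",
    "B": "she went to the shore with a friend to walk with the dog",
}
unknown = "the colour of the sky of the north"
print(closest_author(unknown, known))
```

The function returns only the nearest profile; it says nothing about how much nearer it is, which is exactly the information a statistical evaluation would have to add.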

On the other hand, one can make use of similar methods even though one is not well-versed in statistics. One can use statistical tools to search more or less methodically in a text or a collection of texts. One can for example see if there are any word pairs that occur more often in the same sentence in one text as opposed to other texts. One can then use the results of such investigations of texts to read the texts with new eyes. The results that one arrives at can thus be justified through the reading, and do not necessarily have to be built directly on the statistical results. This can be compared to informal discussions about literature. One does not use the results of the discussions one has had in a pub directly, yet it is totally legitimate to use ideas that arise during the late hours of the night as the basis for posing new questions about a text.
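The word-pair search mentioned above might look like the following sketch. The function name `sentence_pairs`, the crude sentence splitting on punctuation, the threshold of two and the sample texts are assumptions for the example; raw counts are used without any normalisation for text length, which is acceptable only because the result is meant as a prompt for rereading rather than as evidence.

```python
import re
from collections import Counter
from itertools import combinations

def sentence_pairs(text):
    """Count, for every pair of words, the number of sentences containing both."""
    pairs = Counter()
    for sentence in re.split(r"[.!?]+", text):
        # Each word is counted once per sentence; sorting gives a stable pair order.
        words = sorted(set(re.findall(r"\w+", sentence.lower())))
        pairs.update(combinations(words, 2))
    return pairs

# Invented sample texts.
text_a = "Night and fear. Fear of the night. The night was long."
text_b = "Night fell. The day was long."

pairs_a = sentence_pairs(text_a)
pairs_b = sentence_pairs(text_b)

# Pairs that share a sentence at least twice more often in text A than in text B.
distinctive = sorted(p for p, n in pairs_a.items() if n - pairs_b[p] >= 2)
print(distinctive)
```

A list such as this does not prove anything about the text, but it can point the reader to passages worth reading again.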

Reading a Million Books

After the phenomenon Google Books appeared in 2004, many scholars began to ask themselves, and others, whether this could be used in more ways than just finding quotes. One of them was Gregory Crane, a veteran of digitising through his many years as head of the Perseus Project.11 In an article from 2006 he posed the question of what one could do with a million books.12 He began with the premiss that large book collections could not be read by individual readers, and looked at the opportunities that were now available to do research on such large collections.

One possible answer came from Stanford University this year: Matthew L. Jockers gave his students 1200 novels to work with. To work with – but not read.13 As an experiment they used computer programs to study the development of literary style over time, based on a method that Franco Moretti calls “distant reading”. This is of course controversial, as many fear that it will cause the humanity to fall out of the humanities.

For the time being, I consider this work to be an experiment in methodology. A reader can do what she wishes with a text, if it can contribute to understanding it better; reading it backwards, chewing it into bits in a computer and then reassembling them in new ways, reading the first letter of each word or in each line. But if the results of these readings are to have any research value, one must justify one’s results as one does in all research. Perhaps in a few years we will find out if Jockers’s distant reading produces any results, or if it will remain standing as a cul-de-sac in the history of research methodology.

The Museum Method: Integration and Information

During the 1990s the CD-ROM was a common platform for publishing digital material. This implied internal integration of information, with hypermedia systems that connected the different parts of the material on a CD-ROM through hyperlinks, but with no links to any external material. The integration of different resources became simpler around the middle of the decade, when the World Wide Web became a standard platform for the exchange of digital information, at the same time as the Internet became established as the dominant global digital network, also for commercial entities.

The Norwegian Documentation Project began the work of digitising in 1991. Even though a major part of the work on the project was concentrated on the digitising of paper-based university archives, a number of publication solutions were developed. One of these coupled information from Diplomatarium Norvegicum (22 volumes containing the texts of documents and letters from Norway older than 1590) with the Norwegian archaeologist Oluf Rygh’s Norske Gaardnavne (Norwegian farm/estate names) and archaeological museum catalogues. The system was presented by Christian-Emil Ore at a conference in 1997.14 What was innovative about the system was not that it created links between different sources on the web, but that these links were generated automatically based on codes that were inserted in the digital texts.
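The principle of generating links from inserted codes can be sketched as below. The actual encoding used by the Documentation Project is not described here, so everything in the example is an assumption: the TEI-style `placeName` element with a `key` attribute, the identifiers, the invented sentence and the placeholder `example.org` addresses in the hypothetical `GAZETTEER` table.

```python
import xml.etree.ElementTree as ET

# Hypothetical index from place identifiers to entries in another resource.
GAZETTEER = {"farm-001": "https://example.org/farms/farm-001"}

def link_places(xml_fragment, gazetteer):
    """Return (name, target) for every tagged place whose key is in the index."""
    root = ET.fromstring(xml_fragment)
    links = []
    for place in root.iter("placeName"):
        key = place.get("key")
        if key in gazetteer:
            links.append((place.text, gazetteer[key]))
    return links

# Invented document text with two encoded place names.
fragment = (
    '<p>The letter concerns the farm <placeName key="farm-001">Haug</placeName> '
    'and a field at <placeName key="farm-999">Li</placeName>.</p>'
)
links = link_places(fragment, GAZETTEER)
print(links)
```

No link is written by hand: once the code is in the text and the identifier is in the index, the connection follows automatically, and a place whose key is missing from the index is simply left unlinked.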

Here we can see that implicit information that is made explicit can also be used to create networks of links. The lecture from 1997 thus presaged the development of what is today called the Semantic Web.15 This development has accelerated during the 2000s, and today we have effective methods and standards for such links, which will lead to a web of data in addition to the web of documents we know as the World Wide Web. One problem remains, however: the codes that designate the data to be interpreted and linked together have to be inserted, with explicit information about what they signify. A lot of work is being done to develop methods for doing this automatically, not least financed by Google in connection with its digitising work, but the automatic methods still have serious flaws. The manual encoding that is being done in the Munch project is therefore an extremely important foundation for the future development of such systems.

What about Munch?

Any publication project must have the unique characteristics of the material that is being published as its point of reference. As we saw in the introduction, Munch’s texts have been treated both in the tradition of scholarly editing for the publication of literary texts, and in a museum tradition where archive material is seen as historical resources. The most important result is that the texts will be available for reading. The use of TEI encoding also makes it possible to create versions for other purposes. A TEI document can easily be converted to formats suitable for import into systems for distant reading, statistical analysis and various types of visualisation. One example of this is the timelines in the experimental area of the Munch material.16
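As a rough idea of how encoded dates could feed a timeline, the sketch below collects TEI-style `date` elements that carry a `when` attribute and sorts them. The fragment and its dates are invented for the example, the markup is simplified and namespace-free, and the function `timeline` does not describe how the eMunch.no timelines are actually produced.

```python
import xml.etree.ElementTree as ET

def timeline(xml_fragment):
    """Return (ISO date, text as written) pairs in chronological order."""
    root = ET.fromstring(xml_fragment)
    events = [
        (d.get("when"), d.text)
        for d in root.iter("date")
        if d.get("when")  # dates without a normalised value are left out
    ]
    # ISO dates written in full (YYYY-MM-DD) sort chronologically as strings.
    return sorted(events)

# Invented fragment with two dated passages and one undated expression.
fragment = (
    "<div>"
    '<p>Written <date when="1908-10-03">3 October 1908</date>.</p>'
    '<p>Sent <date when="1902-05-17">17 May</date>; '
    "mentioned again <date>last spring</date>.</p>"
    "</div>"
)
events = timeline(fragment)
for when, written in events:
    print(when, written)
```

The undated expression is dropped rather than guessed at, which leaves the question of how to present uncertain dates open for the editor to decide.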

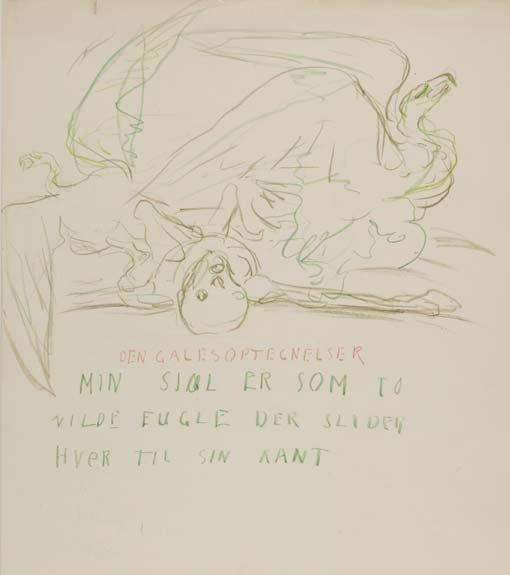

CAT. 64. NOTES OF A MADMAN. MY SOUL IS LIKE ... 1930–35

Free text searching is always useful. But Munch’s orthography is not easy to search in. This is partly due to the fact that the texts have not been through the normalising process that paper editions undergo, and partly due to Munch’s own writing style (cf. Hilde Bøe’s article). Computer-assisted text analysis, as described above, is also made difficult by Munch’s orthography. This is not an unknown problem, however: all such analysis of texts, whether one uses concordances, statistics or other digital methods, is based on a systematising of the base text. An example of this is lemmatisation, where one finds the “dictionary form” of every single word in a text. Divergent orthography increases the need for manual work in this preliminary stage.
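A minimal sketch of why lemmatisation helps searching is given below. The lookup table `LEMMAS` is hypothetical and hand-made, with invented English misspellings standing in for divergent orthography; it is not taken from Munch’s texts, and real lemmatisers rely on far larger lexicons. The manual work mentioned above corresponds to adding the irregular spellings to such a table.

```python
import re

# Hypothetical table mapping inflected and irregularly spelt forms to a lemma.
LEMMAS = {
    "painted": "paint",
    "paintd": "paint",      # invented divergent spelling, added by hand
    "paints": "paint",
    "pictures": "picture",
    "picturs": "picture",   # invented divergent spelling, added by hand
}

def lemmatise(text, table):
    """Replace every word by its dictionary form where the table knows one."""
    tokens = re.findall(r"\w+", text.lower())
    return [table.get(token, token) for token in tokens]

def search(texts, query, table):
    """Indexes of the texts that contain any form of the query word."""
    lemma = table.get(query.lower(), query.lower())
    return [i for i, text in enumerate(texts) if lemma in lemmatise(text, table)]

# Invented sample texts.
texts = [
    "He paintd two picturs.",
    "She paints every day.",
    "The letter arrived late.",
]
hits = search(texts, "painted", LEMMAS)
print(hits)
```

A plain free text search for "painted" would find none of these texts, whereas the lemmatised search finds the first two.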

The development of tools for simple analysis and visualisation in eMunch.no makes it possible for ordinary users to analyse the text material. This is in keeping with the idea behind the Tapor Project mentioned above. In this context, a closer collaboration with other institutions can be an exciting working method, for instance via a close integration between the Munch material and the methods in a system such as Tapor. Through the TEI encoding of names, dates and more, together with the indexes that have been compiled in the work with the project, Munch’s material can be linked to other resources, such as Norsk kunstnerleksikon (The Norwegian Encyclopaedia of Artists).17 With time one can build up a network of information that is cross-indexed. For instance, one can look at historical photographs of places where Munch wrote letters. This is an area full of problems, however; not necessarily problems that must be solved, but ones that must be evaluated and taken into consideration. How can we know when and where a letter was written? How can an uncertainty that is indicated in the text encoding be presented in a computer network of this kind?

There are fascinating possibilities in the thematic encoding that is being conducted on Munch’s texts. Yet what type of links will it be reasonable to couple with external material in the semantic web in the future? Is it reasonable to create links between Munch’s literary figure Vera and Hans Jæger’s literary figure Vera? Furthermore, is it reasonable to link these characters to the historical person Oda Krohg? In my opinion it is important to use the classifications that are inherent in the encoding and indexes within reason. The world can obviously not be divided into either sure knowledge or surmises, but there are certain things that are surer than others. When one encodes the exhibitions that are mentioned in Munch’s business letters, this is different from a type of encoding that indicates a connection between a specific text and a painting. One should not refrain from publishing hypotheses in a digital world, just as one did not refrain from writing about them in books. But it is highly important that the digital method distinguishes between what the publisher, in our case the Munch Museum, considers to be relatively uncontroversial facts, and speculations that are generated by individual writers or scholars.

Conclusion

In the digital treatment of Edvard Munch’s writings, the texts are treated both as literary texts and as historical source material. This choice opens the way to diverse readings and interpretations of the texts. When considered as texts belonging to different genre categories they can be read, analysed and compared to other texts. Analyses and comparisons can be aided by various methods used in digital humanities research, and the material can be included in distant reading studies. For the texts as historical source material, the project has created a solid foundation for linking the information one finds in them with information from other sources, whether these are texts, databases or other resources.

Notes

1 Bøe, Hilde 2009: “eMunch.no – om tekniske og praktiske løsninger i arbeidet med et digitalt arkiv for Edvard Munchs tekster”. Website: http://www.emunch.no:8080/cocoon/emunch/ABOUT2009_Boee_NNE-talk.xhtml (last checked 2010-09-30).

2 The last-mentioned museum method is also closely associated with archives. But the inspiration for this project stems from the museum sector, in particular from the current work in CIDOC, ICOM’s international committee for documentation. Website: http://cidoc.mediahost.org/ (last checked 2010-09-29).

3 Busa, Robert, SJ 1998: “Concluding a life’s safari from punched cards to World Wide Web”, pp. 3–11 in: The Digital Demotic: A Selection of Papers from DRH97. A concordance is an alphabetical vocabulary of words that are found in a text, often with supplementary information about each word.

4 McCarty, Willard 1993: “Handmade, Computer-Assisted, and Electronic Concordances of Chaucer”, pp. 49–65 in: Computer-Assisted Chaucer Studies.

5 Luhn, H.P. 1966: “Keyword-in-Context Index for Technical Literature (KWIC Index)”, pp. 159–167 in: Readings in Automatic Language Processing.

6 TACT, developed by John Bradley, is an early example of such interactive systems.

7 Website: http://portal.tapor.ca/portal/portal (last checked 2010-09-29).

8 Website: http://www.tei-c.org/ (last checked 2010-09-16).

9 Hockey, Susan 2004: “The History of Humanities Computing”, pp. 3–19 in: A Companion to Digital Humanities.

10 Oakes, Michael P. 1998: Statistics for Corpus Linguists.

11 Website: http://www.perseus.tufts.edu/ (last checked 2010-09-16).

12 Crane, Gregory 2006: “What Do You Do with a Million Books?”, in: D-Lib Magazine, 12 (3). Website: http://www.dlib.org/dlib/march06/crane/03crane.html (last checked 2010-09-16).

13 Parry, Marc 2010: “The Humanities Go Google”, in: The Chronicle of Higher Education, May 28, 2010. Website: http://chronicle.com/article/The-Humanities-Go-Google/65713/ (last checked 2010-09-16).

14 Ore, Christian-Emil 1998: “Making Multidisciplinary Resources.” Pp. 65–74 in: The Digital Demotic: A Selection of Papers from DRH97.

15 World Wide Web Consortium is a major actor in this sphere: http://www.w3.org/standards/semanticweb/ (last checked 2010-09-29).

16 [Currently not available online.] Website: http://emunch.no/test/ (last checked 2010-09-29).

17 A digital edition of the encyclopaedia is under development, cf. ABM-utvikling 2008: “Norsk kunstnarleksikon på nett” [press release]. Website: http://www.abm-utvikling.no/for-pressen/pressemeldinger-1/norsk-kunstnarleksikon-pa-nett.html (last checked 2010-09-30).

Translated from Norwegian by Francesca M. Nichols